Digital camera systems, incorporating a variety of charge-coupled device (CCD) detector configurations, are by far the most common image capture technology employed in modern optical microscopy. Until recently, specialized conventional film cameras were generally used to record images observed in the microscope. This traditional method, relying on the photon-sensitivity of silver-based photographic film, involves temporary storage of a latent image in the form of photochemical reaction sites in the exposed film, which only becomes visible in the film emulsion layers after chemical processing (development).

Digital cameras replace the sensitized film with a CCD photon detector, a thin silicon wafer divided into a geometrically regular array of thousands or millions of light-sensitive regions that capture and store image information in the form of localized electrical charge that varies with incident light intensity. The variable electronic signal associated with each picture element (pixel) of the detector is read out very rapidly as an intensity value for the corresponding image location, and following digitization of the values, the image can be reconstructed and displayed on a computer monitor virtually instantaneously.

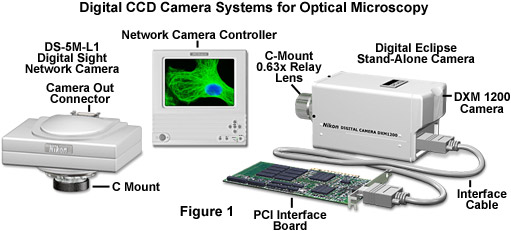

Several digital camera systems designed specifically for optical microscopy are illustrated in Figure 1. The Nikon Digital Eclipse DXM1200 provides high quality photo-realistic digital images at resolutions ranging up to 12 million pixels with low noise, superb color rendition, and high sensitivity. The camera is controlled by software that allows the microscopist a great deal of latitude in collecting, organizing, and correcting digital images. Live color monitoring on the supporting computer screen at 12 frames per second enables easy focusing of images, which can be saved with a choice of three formats: JPG, TIF, and BMP for greater versatility.

The DS-5M-L1 Digital Sight camera system (Figure 1) is Nikon's innovative digital imaging system for microscopy that emphasizes the ease and efficiency of an all-in-one concept, incorporating a built-in LCD monitor in a stand-alone control unit. The system optimizes the capture of high-resolution images up to 5 megapixels through straightforward menus and pre-programmed imaging modes for different observation methods. The stand-alone design offers the advantage of independent operation including image storage to a CompactFlash Card housed in the control/monitor unit, but has the versatility of full network capabilities if desired. Connection is possible to PCs through a USB interface, and to local area networks or the Internet via Ethernet port. Web browser support is available for live image viewing and remote camera control, and the camera control unit supports HTTP, Telnet, FTP server/client, and is DHCP compatible. The camera systems illustrated in Figure 1 represent the advanced technology currently available for digital imaging with the optical microscope.

Perhaps the single most significant advantage of digital image capture in optical microscopy, as exemplified by CCD camera systems, is the possibility for the microscopist to immediately determine whether a desired image has been successfully recorded. This capability is especially valuable considering the experimental complexities of many imaging situations and the transient nature of processes that are commonly investigated. Although the charge-coupled device detector functions in an equivalent role to that of film, it has a number of superior attributes for imaging in many applications. Scientific-grade CCD cameras exhibit extraordinary dynamic range, spatial resolution, spectral bandwidth, and acquisition speed. Considering the high light sensitivity and light collection efficiency of some CCD systems, a film speed rating of approximately ISO 100,000 would be required to produce images of comparable signal-to-noise ratio (SNR). The spatial resolution of current CCDs is similar to that of film, while their resolution of light intensity is one or two orders of magnitude better than that achieved by film or video cameras. Traditional photographic films exhibit no sensitivity at wavelengths exceeding 650 nanometers in contrast to high-performance CCD sensors, which often have significant quantum efficiency into the near infrared spectral region. The linear response of CCD cameras over a wide range of light intensities contributes to the superior performance, and gives such systems quantitative capabilities as imaging spectrophotometers.

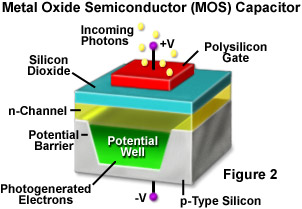

A CCD imager consists of a large number of light-sensing elements arranged in a two-dimensional array on a thin silicon substrate. The semiconductor properties of silicon allow the CCD chip to trap and hold photon-induced charge carriers under appropriate electrical bias conditions. Individual picture elements, or pixels, are defined in the silicon matrix by an orthogonal grid of narrow transparent current-carrying electrode strips, or gates, deposited on the chip. The fundamental light-sensing unit of the CCD is a metal oxide semiconductor (MOS) capacitor operated as a photodiode and storage device. A single MOS device of this type is illustrated in Figure 2, with reverse bias operation causing negatively charged electrons to migrate to an area underneath the positively charged gate electrode. Electrons liberated by photon interaction are stored in the depletion region up to the full well reservoir capacity. When multiple detector structures are assembled into a complete CCD, individual sensing elements in the array are segregated in one dimension by voltages applied to the surface electrodes and are electrically isolated from their neighbors in the other direction by insulating barriers, or channel stops, within the silicon substrate.

The light-sensing photodiode elements of the CCD respond to incident photons by absorbing much of their energy, resulting in liberation of electrons, and the formation of corresponding electron-deficient sites (holes) within the silicon crystal lattice. One electron-hole pair is generated from each absorbed photon, and the resulting charge that accumulates in each pixel is linearly proportional to the number of incident photons. External voltages applied to each pixel's electrodes control the storage and movement of charges accumulated during a specified time interval. Initially, each pixel in the sensor array functions as a potential well to store the charge during collection, and although either negatively charged electrons or positively charged holes can be accumulated (depending on the CCD design), the charge entities generated by incident light are usually referred to as photoelectrons. This discussion considers electrons to be the charge carriers. These photoelectrons can be accumulated and stored for long periods of time before being read from the chip by the camera electronics as one stage of the imaging process.
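The linear relationship between incident photons and stored charge can be written as a short sketch. The function name, the quantum efficiency argument, and the example numbers are illustrative assumptions, not values taken from any particular sensor; the cap at the full-well capacity reflects the storage limit of the potential well.

```python
def collected_electrons(incident_photons, quantum_efficiency, full_well_capacity):
    """Photoelectrons stored in one pixel during an exposure.

    Each absorbed photon yields one electron-hole pair, so the stored charge
    grows linearly with the photon count until the potential well is full.
    """
    generated = round(incident_photons * quantum_efficiency)
    return min(generated, full_well_capacity)


# Hypothetical pixel: 80 percent quantum efficiency, 100,000-electron well.
for photons in (1_000, 50_000, 1_000_000):
    print(photons, "photons ->", collected_electrons(photons, 0.8, 100_000), "electrons")
```

In this simplified model the response is strictly linear below saturation; a real sensor also adds noise terms that are not modeled here.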

Image generation with a CCD camera can be divided into four primary stages or functions: charge generation through photon interaction with the device's photosensitive region, collection and storage of the liberated charge, charge transfer, and charge measurement. During the first stage, electrons and holes are generated in response to incident photons in the depletion region of the MOS capacitor structure, and liberated electrons migrate into a potential well formed beneath an adjacent positively-biased gate electrode. The system of aluminum or polysilicon surface gate electrodes overlies, but is separated from, charge-carrying channels that are buried within a layer of insulating silicon dioxide placed between the gate structure and the silicon substrate. Utilization of polysilicon as an electrode material provides transparency to incident wavelengths longer than approximately 400 nanometers and increases the proportion of surface area of the device that is available for light collection. Electrons generated in the depletion region are initially collected into electrically positive potential wells associated with each pixel. During readout, the collected charge is subsequently shifted along the transfer channels under the influence of voltages applied to the gate structure. Figure 3 illustrates the electrode structure defining an individual CCD sense element.

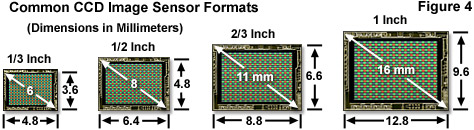

In general, the stored charge is linearly proportional to the light flux incident on a sensor pixel up to the capacity of the well; consequently this full-well capacity (FWC) determines the maximum signal that can be sensed in the pixel, and is a primary factor affecting the CCD's dynamic range. The charge capacity of a CCD potential well is largely a function of the physical size of the individual pixel. Since first introduced commercially, CCDs have typically been configured with square pixels assembled into rectangular area arrays, with an aspect ratio of 4:3 being most common. Figure 4 presents typical dimensions of several of the most common sensor formats in current use, with their size designations in inches according to a historical convention that relates CCD sizes to vidicon tube diameters.

Figure 4: CCD Formats

The rectangular geometry and common dimensions of CCDs result from their early competition with vidicon tube cameras, which required the solid-state sensors to produce an electronic signal output that conformed to the prevailing video standards at the time. Note that the "inch" designations do not correspond directly to any of the CCD dimensions, but represent the size of the rectangular area scanned in the corresponding round vidicon tube. A designated "1-inch" CCD has a diagonal of 16 millimeters and sensor dimensions of 9.6 x 12.8 millimeters, derived from the scanned area of a 1-inch vidicon tube with a 25.4-millimeter outside diameter and an input window approximately 18 millimeters in diameter. Unfortunately, this confusing nomenclature has persisted, often used in reference to CCD "type" rather than size, and even includes sensors classified by a combination of fractional and decimal terms, such as the widely used 1/1.8-inch CCD that is intermediate in size between 1/2-inch and 2/3-inch devices.

Although consumer cameras continue to primarily employ rectangular sensors built to one of the "standardized" size formats, it is becoming increasingly common for scientific-grade cameras to incorporate square sensor arrays, which better match the circular image field projected in the microscope. A large range of sensor array sizes is produced, and individual pixel dimensions vary widely in designs optimized for different performance parameters. CCDs in the common 2/3-inch format typically have arrays of 768 x 480 or more diodes and dimensions of 8.8 x 6.6 millimeters (11-millimeter diagonal). The diagonal of many sensor arrays is considerably smaller than the typical microscope field of view, so the sensor captures a highly magnified view of only a portion of the full field. The increased magnification can be beneficial in some applications, but if the reduced field of view is an impediment to imaging, demagnifying intermediate optical components are required. The alternative is use of a larger CCD that better matches the image field diameter, which ranges from 18 to 26 millimeters in typical microscope configurations.
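As a quick check of the format dimensions quoted above, the following sketch computes each sensor diagonal and compares it with the 18 to 26 millimeter range of field diameters. The dictionary and function names are illustrative choices, and the comparison is of diagonal against field diameter only, not of areas.

```python
import math

# Sensor dimensions in millimeters, as quoted in the text.
FORMATS_MM = {"1-inch": (12.8, 9.6), "2/3-inch": (8.8, 6.6)}
# Typical range of microscope image field diameters, in millimeters.
FIELD_DIAMETERS_MM = (18.0, 26.0)


def diagonal_mm(width_mm, height_mm):
    """Diagonal of a rectangular sensor from its side lengths."""
    return math.hypot(width_mm, height_mm)


for name, (width, height) in FORMATS_MM.items():
    diagonal = diagonal_mm(width, height)
    ratios = ", ".join(
        f"{diagonal / field:.0%} of a {field:.0f} mm field diameter"
        for field in FIELD_DIAMETERS_MM
    )
    print(f"{name}: diagonal {diagonal:.1f} mm ({ratios})")
```

The calculation also makes the nomenclature problem concrete: the diagonal of a "1-inch" sensor is well short of 25.4 millimeters.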

An approximation of CCD potential-well storage capacity, in electrons, may be obtained by multiplying the diode (pixel) area in square micrometers by 1000. A number of consumer-grade 2/3-inch CCDs, with pixel sizes ranging from 7 to 13 micrometers, are capable of storing from 50,000 to 100,000 electrons. Using this approximation strategy, a diode with 10 x 10 micrometer dimensions (an area of 100 square micrometers) will have a full-well capacity of approximately 100,000 electrons. For a given CCD size, the design choice regarding total number of pixels in the array, and consequently their dimensions, requires a compromise between spatial resolution and pixel charge capacity. A trend in current consumer devices toward maximizing pixel count and resolution has resulted in very small diode sizes, with some of the newer 2/3-inch sensors utilizing pixels less than 3 micrometers in size.
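The rule of thumb can be expressed as a one-line function. This is a minimal sketch that assumes pixel dimensions in micrometers; the function and constant names are illustrative, and the result is only a rough estimate, since real devices depart from the rule.

```python
# Rule-of-thumb factor from the text: about 1000 electrons per square micrometer.
ELECTRONS_PER_SQUARE_MICROMETER = 1000


def estimate_full_well(pixel_width_um, pixel_height_um=None):
    """Approximate full-well capacity (electrons) from pixel dimensions."""
    if pixel_height_um is None:
        pixel_height_um = pixel_width_um  # square pixel
    return pixel_width_um * pixel_height_um * ELECTRONS_PER_SQUARE_MICROMETER


for side_um in (3, 7, 10, 25):
    print(f"{side_um} x {side_um} um pixel: ~{estimate_full_well(side_um):,} electrons")
```

The quadratic dependence on pixel side length shows why shrinking pixels to raise resolution costs charge capacity so quickly.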

CCDs designed for scientific imaging have traditionally employed larger photodiodes than those intended for consumer (especially video-rate) and industrial applications. Because full-well capacity and dynamic range are direct functions of diode size, scientific-grade CCDs used in slow-scan imaging applications have typically employed diodes as large as 25 x 25 micrometers in order to maximize dynamic range, sensitivity, and signal-to-noise ratio. Many current high-performance scientific-grade cameras incorporate design improvements that have enabled use of large arrays having smaller pixels, which are capable of maintaining the optical resolution of the microscope at high frame rates. Large arrays of several million pixels in these improved designs can provide high-resolution images of the entire field of view, and by utilizing pixel binning (discussed below) and variable readout rate, deliver the higher sensitivity of larger pixels when necessary.

Readout of CCD Array Photoelectrons

Before stored charge from each sense element in a CCD can be measured to determine photon flux on that pixel, the charge must first be transferred to a readout node while maintaining the integrity of the charge packet. A fast and efficient charge-transfer process, as well as a rapid readout mechanism, is crucial to the function of CCDs as imaging devices. When a large number of MOS capacitors are placed close together to form a sensor array, charge is moved across the device by manipulating voltages on the capacitor gates in a pattern that causes charge to spill from one capacitor to the next, or from one row of capacitors to the next. The translation of charge within the silicon is effectively coupled to clocked voltage patterns applied to the overlying electrode structure, the basis of the term "charge-coupled" device. The CCD was initially conceived as a memory array, and intended to function as an electronic version of the magnetic bubble device. The charge-transfer scheme satisfies the critical requirement for memory devices of establishing a physical quantity that represents an information bit, and maintaining its integrity until readout. In a CCD used for imaging, an information bit is represented by a packet of charges derived from photon interaction. Because the CCD is a serial device, the charge packets are read out one at a time.

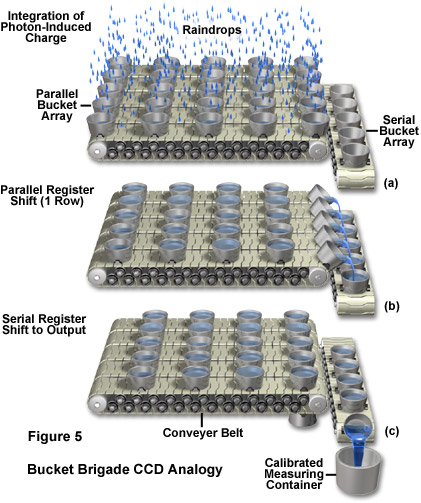

The stored charge accumulated within each CCD photodiode during a specified time interval, referred to as the integration time or exposure time, must be measured to determine the photon flux on that diode. Quantification of stored charge is accomplished by a combination of parallel and serial transfers that deliver each sensor element's charge packet, in sequence, to a single measuring node. The electrode network, or gate structure, built onto the CCD in a layer adjoining the sensor elements, constitutes the shift register for charge transfer. The basic charge transfer concept that enables serial readout from a two-dimensional diode array initially requires the entire array of individual charge packets from the imager surface, constituting the parallel register, to be simultaneously transferred by a single-row incremental shift. The charge-coupled shift of the entire parallel register moves the row of pixel charges nearest the register edge into a specialized single row of pixels along one edge of the chip referred to as the serial register. It is from this row that the charge packets are moved in sequence to an on-chip amplifier for measurement. After the serial register is emptied, it is refilled by another row-shift of the parallel register, and the cycle of parallel and serial shifts is repeated until the entire parallel register is emptied. Some CCD manufacturers utilize the terms vertical and horizontal in referring to the parallel and serial registers, respectively, although the latter terms are more readily associated with the function accomplished by each.

A widely used analogy to aid in visualizing the concept of serial readout of a CCD is the bucket brigade for rainfall measurement, in which the intensity of rain falling on an array of buckets may vary from place to place, much as the flux of incident photons varies across an imaging sensor (see Figure 5(a)). The parallel register is represented by an array of buckets, which have collected various amounts of signal (water) during an integration period. The buckets are transported on a conveyor belt in stepwise fashion toward a row of empty buckets that represent the serial register, and which move on a second conveyor oriented perpendicularly to the first. In Figure 5(b), an entire row of buckets is being shifted in parallel into the reservoirs of the serial register. The serial shift and readout operations are illustrated in Figure 5(c), which depicts the accumulated rainwater in each bucket being transferred sequentially into a calibrated measuring container, analogous to the CCD output amplifier. When the contents of all containers on the serial conveyor have been measured in sequence, another parallel shift transfers contents of the next row of collecting buckets into the serial register containers, and the process repeats until the contents of every bucket (pixel) have been measured.
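The bucket brigade can be mimicked with nested lists. The sketch below is a simplified model, not a description of any camera's firmware: it assumes the last row of the list sits next to the serial register and that index 0 of the serial register sits next to the output node, and the function name is an illustrative choice.

```python
def read_out(image):
    """Return pixel values in the order they reach the output amplifier.

    `image` is a non-empty list of equal-length rows of charge values.
    """
    parallel = [list(row) for row in image]  # work on a copy
    n_rows, n_cols = len(parallel), len(parallel[0])
    measured = []
    for _ in range(n_rows):
        # Parallel shift: every row advances one step, the row at the edge
        # drops into the serial register, and an empty row enters at the top.
        serial = parallel.pop()
        parallel.insert(0, [0] * n_cols)
        for _ in range(n_cols):
            # Serial shift: the packet nearest the output node is measured
            # and an empty well enters at the far end of the register.
            measured.append(serial.pop(0))
            serial.append(0)
    return measured


frame = [[1, 2, 3],
         [4, 5, 6]]
print(read_out(frame))  # row nearest the serial register is read first
```

Every pixel passes through the same single measuring step, which is why the output is a one-dimensional stream even though the sensor is two-dimensional.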

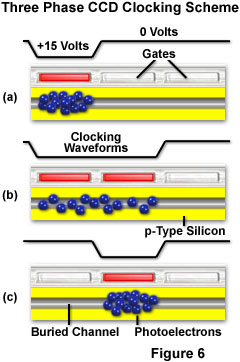

There are many designs in which MOS capacitors can be configured, and their gate voltages driven, to form a CCD imaging array. As described previously, gate electrodes are arranged in strips covering the entire imaging surface of the CCD face. The simplest and most common charge transfer configuration is the three-phase CCD design, in which each photodiode (pixel) is divided into thirds with three parallel potential wells defined by gate electrodes. In this design, every third gate is connected to the same clock driver circuit. The basic sense element in the CCD, corresponding to one pixel, consists of three gates connected to three separate clock drivers, termed phase-1, phase-2, and phase-3 clocks. Each sequence of three parallel gates makes up a single pixel's register, and the thousands of pixels covering the CCD's imaging surface constitute the device's parallel register. Once trapped in a potential well, electrons are moved across each pixel in a three-step process that shifts the charge packet from one pixel row to the next. A sequence of voltage changes applied to alternate electrodes of the parallel (vertical) gate structure moves the potential wells and the trapped electrons under control of a parallel shift register clock.

The general clocking scheme employed in three-phase transfer begins with a charge integration step, in which two of the three parallel phases per pixel are set to a high bias value, producing a high-field region relative to the third gate, which is held at low or zero potential. For example, phases 1 and 2 may be designated collecting phases and held at higher electrostatic potential relative to phase 3, which serves as a barrier phase to separate charge being collected in the high-field phases of the adjacent pixel. Following charge integration, transfer begins by holding only the phase-1 gates at high potential so that charge generated in that phase will collect there, and charge generated under the phase-2 and phase-3 gates, now both at zero potential, rapidly diffuses into the potential well under phase 1. Figure 3 illustrates the electrode structure defining each pixel of a three-phase CCD, and depicts electrons accumulating in the potential well underlying the phase-1 electrode, which is being held at a positive voltage (labeled +V). Charge transfer progresses with an appropriately timed sequence of voltages being applied to the gates in order to cause potential wells and barriers to migrate across each pixel.

At each transfer step, the voltage coupled to the well ahead of the charge packet is made positive while the electron-containing well is made negative or set to zero (ground), forcing the accumulated electrons to advance to the next phase. Rather than utilizing abrupt voltage transitions in the clocking sequence, the applied voltage changes on adjacent phases are gradual and overlap in order to ensure the most efficient charge transfer. The transition to phase 2 is carried out by applying positive potential to the phase-2 gates, spreading the collected charge between the phase-1 and phase-2 wells, and when the phase-1 potential is returned to ground, the entire charge packet is forced into phase 2. A similar sequence of timed voltage transitions, under control of the parallel shift register clock, is employed to shift the charge from phase 2 to phase 3, and the process continues until an entire single-pixel shift has been completed. One three-phase clock cycle applied to the entire parallel register results in a single-row shift of the entire array. An important factor in three-phase transfer is that a potential barrier is always maintained between adjacent pixel charge packets, which allows the one-to-one spatial correspondence between sensor and display pixels to be maintained throughout the image capture sequence.

Figure 6 illustrates the sequence of operations just described for charge transfer in a three-phase CCD, as well as the clocking sequence for drive pulses supplied by the parallel shift register clock to accomplish the transfer. In this schematic visualization of the pixel, charge is depicted being transferred from left to right by clocking signals that simultaneously decrease the voltage on the positively-biased electrode (defining a potential well) and increase it on the electrode to the right (Figures 6(a) and 6(b)). In the last of the three steps (Figure 6(c)), charge has been completely transferred from one gate electrode to the next. Note that the rising and falling phases of the clock drive pulses are timed to overlap slightly (not illustrated) in order to more efficiently transfer charge and to minimize the possibility of charge loss during the shift.
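The three-step shift can be sketched by treating the register as a flat list of gates, three per pixel. This is an idealized model that assumes lossless transfer and charge that starts under the phase-1 gates (list indices 0, 3, 6, and so on); the overlapping clock edges are collapsed into a single move per step, and the function name is illustrative.

```python
def three_phase_cycle(gates):
    """Advance every charge packet by one pixel (three gates).

    `gates` holds the charge under each gate; its length is a multiple of 3
    and charge initially sits only under phase-1 gates. Returns the new gate
    contents and the charge that left the end of the register.
    """
    gates = list(gates)
    n_gates = len(gates)
    shifted_out = 0
    for holding in (0, 1, 2):  # phase-1, phase-2, phase-3 hold charge in turn
        # The next gate goes positive so the packet spreads across both wells,
        # then the holding gate returns to ground and forces the packet forward.
        for i in range(n_gates - 1, -1, -1):
            if i % 3 == holding and gates[i]:
                if i + 1 < n_gates:
                    gates[i + 1] += gates[i]
                else:
                    shifted_out += gates[i]  # packet leaves the register
                gates[i] = 0
    return gates, shifted_out


# Two pixels holding 5 and 7 electrons under their phase-1 gates.
print(three_phase_cycle([5, 0, 0, 7, 0, 0]))
```

Because only one phase per pixel holds charge at any step, at least one empty gate always separates neighboring packets, which is the barrier property the text emphasizes.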

With each complete parallel transfer, charge packets from an entire pixel row are moved into the serial register where they can be sequentially shifted toward the output amplifier, as illustrated in the bucket brigade analogy (Figure 5(c)). This horizontal (serial) transfer utilizes the same three-phase charge-coupling mechanism as the vertical row-shift, with timing control provided in this case by signals from the serial shift register clock. After all pixels are transferred from the serial register for readout, the parallel register clock provides the time signals for shifting the next row of trapped photoelectrons into the serial register. Each charge packet in the serial register is delivered to the CCD's output node where it is detected and read by an output amplifier (sometimes referred to as the on-chip preamplifier) that converts the charge into a proportional voltage. The voltage output of the amplifier represents the signal magnitude produced by successive photodiodes, as read out in sequence from left to right in each row and from the top row to the bottom over the entire two-dimensional array. The CCD output at this stage is, therefore, an analog voltage signal equivalent to a raster scan of accumulated charge over the imaging surface of the device.

After the output amplifier fulfills its function of amplifying a charge packet and converting it to a proportional voltage, the signal is transmitted to an analog-to-digital converter (ADC), which converts the voltage value into the 0 and 1 binary code necessary for interpretation by the computer. Each pixel is assigned a digital value corresponding to signal amplitude, in steps sized according to the resolution, or bit depth, of the ADC. For example, an ADC capable of 12-bit resolution assigns each pixel a value ranging from 0 to 4095, representing 4096 possible image gray levels (2 to the 12th power is equal to 4096 digitizer steps). Each gray-level step is termed an analog-to-digital unit (ADU).
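The digitization step can be illustrated with an idealized converter. The sketch assumes a linear ADC whose input range runs from zero to a hypothetical full-scale voltage; the function name and the 1-volt full scale are illustrative, and real converters add offset and noise that are ignored here.

```python
def digitize(voltage, full_scale_voltage, bit_depth=12):
    """Map an analog voltage to an integer gray level (ADU)."""
    levels = 2 ** bit_depth  # 4096 steps for a 12-bit converter
    code = int(voltage / full_scale_voltage * levels)
    # Clamp to the valid range so a full-scale input maps to the top code.
    return max(0, min(levels - 1, code))


for volts in (0.0, 0.25, 0.5, 1.0):
    print(f"{volts:.2f} V -> {digitize(volts, full_scale_voltage=1.0)} ADU")
```

Raising `bit_depth` makes each step smaller, but the extra gray levels are only meaningful if the camera's dynamic range supports them.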

The technological sophistication of current CCD imaging systems is remarkable considering the large number of operations required to capture a digital image, and the accuracy and speed with which the process is accomplished. The sequence of events required to capture a single image with a full-frame CCD camera system can be summarized as follows:

- Camera shutter is opened to begin accumulation of photoelectrons, with the gate electrodes biased appropriately for charge collection.
- At the end of the integration period, the shutter is closed and accumulated charge in pixels is shifted row by row across the parallel register under control of clock signals from the camera electronics. Rows of charge packets are transferred in sequence from one edge of the parallel register into the serial shift register.
- Charge contents of pixels in the serial register are transferred one pixel at a time into an output node to be read by an on-chip amplifier, which boosts the electron signal and converts it into an analog voltage output.
- An ADC assigns a digital value for each pixel according to its voltage amplitude.
- Each pixel value is stored in computer memory or in a camera frame buffer.
- The serial readout process is repeated until all pixel rows of the parallel register are emptied, which is commonly 1000 or more rows for high-resolution cameras.
- The complete image file in memory, which may be several megabytes in size, is displayed in a suitable format on the computer monitor for visual evaluation.
- The CCD is cleared of residual charge prior to the next exposure by executing the full readout cycle except for the digitization step.

In spite of the large number of operations performed, more than one million pixels can be transferred across the chip, assigned a gray-scale value with 12-bit resolution, stored in computer memory, and displayed in less than one second. A typical total time requirement for readout and image display is approximately 0.5 second for a 1-megapixel camera operating at a 5-MHz digitization rate. Charge transfer efficiency can also be extremely high for cooled-CCD cameras, with minimal loss of charge occurring, even with the thousands of transfers required for pixels in regions of the array that are farthest from the output amplifier.
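Two back-of-the-envelope calculations help put these figures in context. The sketch below assumes one pixel is digitized per clock period, and the charge transfer efficiency of 0.99999 per transfer and the count of 2000 transfers are hypothetical values chosen only for illustration; both function names are likewise illustrative.

```python
def digitization_time_s(n_pixels, digitization_rate_hz):
    """Time to digitize every pixel at one pixel per clock period."""
    return n_pixels / digitization_rate_hz


def charge_retained_fraction(transfer_efficiency, n_transfers):
    """Fraction of a charge packet that survives repeated transfers."""
    return transfer_efficiency ** n_transfers


t = digitization_time_s(1_000_000, 5e6)
print(f"Digitizing 1 megapixel at 5 MHz: {t:.2f} s")

# Hypothetical efficiency and transfer count, for illustration only.
fraction = charge_retained_fraction(0.99999, 2000)
print(f"Charge retained after 2000 transfers: {fraction:.1%}")
```

Under these assumptions digitization alone accounts for only part of the roughly 0.5 second total; the remainder would cover charge shifting, data transfer, and display, which are not modeled here.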

CCD Image Sensor Architecture

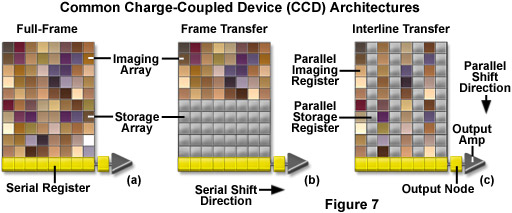

Three basic variations of CCD architecture are in common use for imaging systems: full frame, frame transfer, and interline transfer (see Figure 7). The full-frame CCD, as referred to in the previous description of readout procedure, has the advantage of nearly 100 percent of its surface being photosensitive, with virtually no dead space between pixels. The imaging surface must be protected from incident light during readout of the CCD, and for this reason, an electromechanical shutter is usually employed for controlling exposures. Charge accumulated with the shutter open is subsequently transferred and read out after the shutter is closed, and because the two steps cannot occur simultaneously, image frame rates are limited by the mechanical shutter speed, the charge-transfer rate, and the readout steps. Although full-frame devices have the largest photosensitive area of the CCD types, they are most useful with specimens having high intra-scene dynamic range, and in applications that do not require time resolution of less than approximately one second. When operated in a subarray mode (in which a reduced portion of the full pixel array is read out) in order to accelerate readout, the fastest frame rates possible are on the order of 10 frames per second, limited by the mechanical shutter.

Frame-transfer CCDs can operate at faster frame rates than full-frame devices because exposure and readout can occur simultaneously with various degrees of overlap in timing. They are similar to full-frame devices in structure of the parallel register, but one-half of the rectangular pixel array is covered by an opaque mask, and is used as a storage buffer for photoelectrons gathered by the unmasked light-sensitive portion. Following image exposure, charge accumulated in the photosensitive pixels is rapidly shifted to pixels on the storage side of the chip, typically within approximately 1 millisecond. Because the storage pixels are protected from light exposure by an aluminum or similar opaque coating, stored charge in that portion of the sensor can be systematically read out at a slower, more efficient rate while the next image is simultaneously being exposed on the photosensitive side of the chip. A camera shutter is not necessary because the time required for charge transfer from the image area to the storage area of the chip is only a fraction of the time needed for a typical exposure. Because cameras utilizing frame-transfer CCDs can be operated continuously at high frame rates without mechanical shuttering, they are suitable for investigating rapid kinetic processes by methods such as dye ratio imaging, in which high spatial resolution and dynamic range are important. A disadvantage of this sensor type is that only one-half of the surface area of the CCD is used for imaging, and consequently, a much larger chip is required than for a full-frame device with an equivalent-size imaging array, adding to the cost and imposing constraints on the physical camera design.

In the interline-transfer CCD design, columns of active imaging pixels and masked storage-transfer pixels alternate over the entire parallel register array. Because a charge-transfer channel is located immediately adjacent to each photosensitive pixel column, stored charge must only be shifted one column into a transfer channel. This single transfer step can be performed in less than 1 millisecond, after which the storage array is read out by a series of parallel shifts into the serial register while the image array is being exposed for the next image. The interline-transfer architecture allows very short integration periods through electronic control of exposure intervals, and in place of a mechanical shutter, the array can be rendered effectively light-insensitive by discarding accumulated charge rather than shifting it to the transfer channels. Although interline-transfer sensors allow video-rate readout and high-quality images of brightly illuminated subjects, basic forms of earlier devices suffered from reduced dynamic range, resolution, and sensitivity, due to the fact that approximately 75 percent of the CCD surface is occupied by the storage-transfer channels.

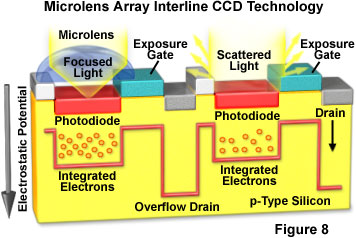

Although earlier interline-transfer CCDs, such as those used in video camcorders, offered high readout speed and rapid frame rates without the necessity of shutters, they did not provide adequate performance for low-light high-resolution applications in microscopy. In addition to the reduction in light-sensitivity attributable to the alternating columns of imaging and storage-transfer regions, rapid readout rates led to higher camera read noise and reduced dynamic range in earlier interline-transfer imagers. Improvements in sensor design and camera electronics have completely changed the situation to the extent that current interline devices provide superior performance for digital microscopy cameras, including those used for low-light applications such as recording small concentrations of fluorescent molecules. Adherent microlenses, aligned on the CCD surface to cover pairs of image and storage pixels, collect light that would normally be lost on the masked pixels and focus it on the light-sensitive pixels (see Figure 8). By combining small pixel size with microlens technology, interline sensors are capable of delivering spatial resolution and light-collection efficiency comparable to full-frame and frame-transfer CCDs. The effective photosensitive area of interline sensors utilizing on-chip microlenses is increased to 75-90 percent of the surface area.

An additional benefit of incorporating microlenses in the CCD structure is that the spectral sensitivity of the sensor can be extended into the blue and ultraviolet wavelength regions, providing enhanced utility for shorter-wavelength applications, such as popular fluorescence techniques employing green fluorescent protein (GFP) and dyes excited by ultraviolet light. In order to increase quantum efficiency across the visible spectrum, recent high-performance chips incorporate gate structures composed of materials such as indium tin oxide, which have much higher transparency in the blue-green spectral region. Such nonabsorbing gate structures result in quantum efficiency values approaching 80 percent for green light.

The past limitation of reduced dynamic range for interline-transfer CCDs has largely been overcome by improved electronic technology that has lowered camera read noise by approximately one-half. Because the active pixel area of interline CCDs is approximately one-third that of comparable full-frame devices, the full well capacity (a function of pixel area) is similarly reduced. Previously, this factor, combined with relatively high camera read noise, resulted in insufficient signal dynamic range to support more than 8 or 10-bit digitization. High-performance interline cameras now operate with read noise values as low as 4 to 6 electrons, resulting in dynamic range performance equivalent to that of 12-bit cameras employing full-frame CCDs. Additional improvements in chip design factors such as clocking schemes, and in camera electronics, have enabled increased readout rates. Interline-transfer CCDs now enable 12-bit megapixel images to be acquired at 20-megahertz rates, approximately 4 times the rate of full-frame cameras with comparable array sizes. Other technological improvements, including modifications of the semiconductor composition, are incorporated in some interline-transfer CCDs to improve quantum efficiency in the near-infrared portion of the spectrum.

CCD Detector Imaging Performance

Several camera operation parameters that modify the readout stage of image acquisition have an impact on image quality. The readout rate of most scientific-grade CCD cameras is adjustable, and typically ranges from approximately 0.1 MHz to 10 or 20 MHz. The maximum achievable rate is a function of the processing speed of the ADC and other camera electronics, which reflect the time required to digitize a single pixel. Applications aimed at tracking rapid kinetic processes require fast readout and frame rates in order to achieve adequate temporal resolution, and in certain situations, a video rate of 30 frames per second or higher is necessary. Unfortunately, of the various noise components that are always present in an electronic image, read noise is a major source, and high readout rates increase the noise level. Whenever the highest temporal resolution is not required, better images of specimens that produce low pixel intensity values can be obtained at slower readout rates, which minimize noise and maintain adequate signal-to-noise ratio. When dynamic processes require rapid imaging frame rates, the normal CCD readout sequence can be modified to reduce the number of charge packets processed, enabling acquisition rates of hundreds of frames per second in some cases. This increased frame rate can be accomplished by combining pixels during CCD readout and/or by reading out only a portion of the detector array, as described below.

The image acquisition software of most CCD camera systems used in optical microscopy allows the user to define a smaller subset, or subarray, of the entire pixel array to be designated for image capture and display. By selecting a reduced portion of the image field for processing, unselected pixels are discarded without being digitized by the ADC, and readout speed is correspondingly increased. Depending upon the camera control software employed, a subarray may be chosen from pre-defined array sizes, or designated interactively as a region of interest using the computer mouse and the monitor display. The subarray readout technique is commonly utilized for acquiring sequences of time-lapse images, in order to produce smaller and more manageable image files.
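
As a rough illustration of why a subarray speeds up acquisition, the sketch below estimates frame rate as the pixel digitization rate divided by the number of pixels digitized. The function name and the 1024 x 1024 and 256 x 256 array sizes are assumptions chosen for illustration. The model ignores exposure time, charge-shift overhead, and the time spent discarding unselected pixels, so real cameras will be slower than these figures.

```python
def estimated_frame_rate(width, height, pixel_rate_hz):
    """Upper-bound frames per second if digitization were the only cost."""
    pixels_digitized = width * height
    return pixel_rate_hz / pixels_digitized


# Hypothetical full array versus a hypothetical subarray, both digitized at 20 MHz.
full_frame = estimated_frame_rate(1024, 1024, 20e6)
subarray = estimated_frame_rate(256, 256, 20e6)

print(round(full_frame, 1))  # 19.1
print(round(subarray, 1))    # 305.2
```

Under these assumptions, reading one-sixteenth of the pixels raises the ceiling on frame rate sixteenfold, which is consistent with the rates of hundreds of frames per second mentioned above.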

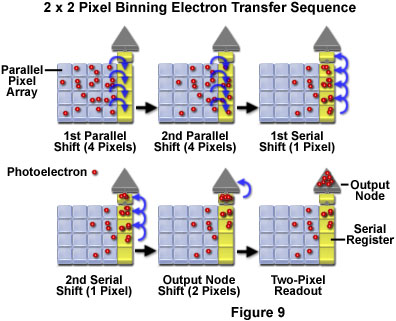

Accumulated charge packets from adjacent pixels in the CCD array can be combined during readout to form a reduced number of superpixels. This process is referred to as pixel binning, and is performed in the parallel register by clocking two or more row shifts into the serial register prior to executing the serial shift and readout sequence. The binning process is usually repeated in the serial register by clocking multiple shifts into the readout node before the charge is read by the output amplifier. Any combination of parallel and serial shifts can be used, but typically a symmetrical matrix of pixels is combined to form each single superpixel (see Figure 9). As an example, 3 x 3 binning is accomplished by initially performing 3 parallel shifts of rows into the serial register (prior to serial transfer), at which point each pixel in the serial register contains the combined charge from 3 pixels, which were neighbors in adjacent parallel rows. Subsequently, 3 serial-shift steps are performed into the output node before the charge is measured. The resulting charge packet is processed as a single pixel, but contains the combined photoelectron content of 9 physical pixels (a 3 x 3 superpixel). Although binning reduces spatial resolution, the procedure often allows image acquisition under circumstances that make imaging impossible with normal CCD readout. It allows higher frame rates for image sequences if the acquisition rate is limited by the camera read cycle, as well as providing improved signal-to-noise ratio for equivalent exposure times. Additional advantages include shorter exposure times to produce the same image brightness (highly important for live cell imaging), and smaller image file sizes, which reduce computer storage demands and speed image processing.
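
The two-stage binning sequence described above can be modeled in software. The following is a minimal sketch that sums charge first across rows (the parallel shifts) and then along each summed row (the serial shifts). The function name `bin_pixels` and the 6 x 6 test frame are hypothetical, and the code models only the arithmetic of charge summation, not the on-chip clocking.

```python
def bin_pixels(image, factor):
    """Sum charge in non-overlapping factor x factor blocks of a 2D list."""
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of the binning factor")
    # Parallel step: add `factor` adjacent rows together, as if shifting them
    # into the serial register before any serial transfer takes place.
    row_sums = [
        [sum(image[r + k][c] for k in range(factor)) for c in range(cols)]
        for r in range(0, rows, factor)
    ]
    # Serial step: add `factor` adjacent serial-register pixels in the output node.
    return [
        [sum(row[c:c + factor]) for c in range(0, cols, factor)]
        for row in row_sums
    ]


# Hypothetical 6 x 6 frame holding 10 electrons in every pixel.
frame = [[10] * 6 for _ in range(6)]
print(bin_pixels(frame, 3))  # [[90, 90], [90, 90]]
```

Each superpixel holds the charge of 9 physical pixels, and the 36-pixel frame is reduced to 4 values, which illustrates both the signal gain and the loss of spatial sampling.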

A third camera acquisition factor, which can affect image quality because it modifies the CCD readout process, is the electronic gain of the camera system. The gain adjustment of a digital CCD camera system defines the number of accumulated photoelectrons that determine each gray level step distinguished by the readout electronics, and is typically applied at the analog-to-digital conversion step. An increase in electronic gain corresponds to a decrease in the number of photoelectrons that are assigned per gray level (electrons/ADU), and allows a given signal level to be divided into a larger number of gray level steps. Note that this differs from gain adjustments applied to photomultiplier tubes or vidicon tubes, in which the varying signal is amplified by a fixed multiplication factor. Although electronic gain adjustment does provide a method to expand a limited signal amplitude to a desired large number of gray levels, if it is used excessively, the small number of electrons that distinguish adjacent gray levels can lead to digitization errors. High gain settings can result in noise due to the inaccurate digitization, which appears as graininess in the final image. If a reduction in exposure time is desired, an increase in electronic gain will allow maintaining a fixed large number of gray scale steps, in spite of the reduced signal level, providing that the applied gain does not produce excessive image deterioration. As an example of the effect of different gain factors applied to a constant signal level, an initial gain setting that assigns 8 electrons per ADU (gray level) dictates that a pixel signal consisting of 8000 electrons will be displayed at 1000 gray levels. By increasing the gain through application of a 4x gain factor to the base setting, the number of electrons per gray level is reduced to 2 (2 electrons/ADU), and 4000 gray levels are distinguished by the digitizing electronics.
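
The gain arithmetic in the example above can be written out as a short sketch. The function name and parameters are hypothetical, and the calculation ignores the upper limit imposed by the bit depth of the ADC.

```python
def gray_levels_for_signal(signal_electrons, base_electrons_per_adu, gain_factor=1.0):
    """Number of gray levels (ADU) spanned by a signal at a given gain factor."""
    # Raising the gain factor lowers the number of electrons per gray level.
    electrons_per_adu = base_electrons_per_adu / gain_factor
    return signal_electrons / electrons_per_adu


print(gray_levels_for_signal(8000, 8))     # 1000.0
print(gray_levels_for_signal(8000, 8, 4))  # 4000.0
```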

Digital image quality can be assessed in terms of four quantifiable criteria that are determined in part by the CCD design, but which also reflect the implementation of the previously described camera operation variables that directly affect the imaging performance of the CCD detector. The principal image quality criteria and their effects are summarized as follows:

- Spatial Resolution: Determines the ability to capture fine specimen details without pixels being visible in the image.
- Light-Intensity Resolution: Defines the dynamic range or number of gray levels that are distinguishable in the displayed image.
- Time Resolution: The sampling (frame) rate determines the ability to follow live specimen movement or rapid kinetic processes.
- Signal-to-Noise Ratio: Determines the visibility and clarity of specimen signals relative to the image background.

In microscope imaging, it is common that not all important image quality criteria can be simultaneously optimized in a single image, or image sequence. Obtaining the best images within the constraints imposed by a particular specimen or experiment typically requires a compromise among the criteria listed, which often exert contradictory demands. For example, capturing time-lapse sequences of live fluorescently-labeled specimens may require reducing the total exposure time to minimize photobleaching and phototoxicity. Several methods can be utilized to accomplish this, although each involves a degradation of some aspect of imaging performance. If the specimen is exposed less frequently, temporal resolution is reduced; applying pixel binning to allow shorter exposures reduces spatial resolution; and increasing electronic gain compromises dynamic range and signal-to-noise ratio. Different situations often require completely different imaging rationales for optimum results. In contrast to the previous example, in order to maximize dynamic range in a single image of a specimen that requires a short exposure time, the application of binning or a gain increase may accomplish the goal without significant negative effects on the image. Performing efficient digital imaging requires the microscopist to be completely familiar with the crucial image quality criteria, and the practical aspects of balancing camera acquisition parameters to maximize the most significant factors in a particular situation.

A small number of CCD performance factors and camera operational parameters dominate the major aspects of digital image quality in microscopy, and their effects overlap to a great extent. Factors that are most significant in the context of practical CCD camera use, and discussed further in the following sections, include detector noise sources and signal-to-noise ratio, frame rate and temporal resolution, pixel size and spatial resolution, spectral range and quantum efficiency, and dynamic range.

CCD Camera Noise Sources

Camera sensitivity, in terms of the minimum detectable signal, is determined by both the photon statistical (shot) noise and electronic noise arising in the CCD. A conservative estimate is that a signal can only be discriminated from accompanying noise if it exceeds the noise by a factor of approximately 2.7 (SNR of 2.7). The minimum signal that can theoretically yield a given SNR value is determined by random variations of the photon flux, an inherent noise source associated with the signal, even with an ideal noiseless detector. This photon statistical noise is equal to the square root of the number of signal photons, and since it cannot be eliminated, it determines the maximum achievable SNR for a noise-free detector. The signal-to-noise ratio is therefore given by the signal level, S, divided by the square root of the signal ($S^{1/2}$), and is equal to the square root of S. If an SNR value of 2.7 is required for discriminating signal from noise, a signal level of 8 photons (2.7 squared is approximately 7.3, rounded up to a whole photon) is the minimum theoretically detectable light flux.
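
The shot-noise limit can be checked numerically. This is a minimal sketch for an ideal noiseless detector, and the function names are hypothetical.

```python
import math


def shot_noise_snr(signal_photons):
    """SNR of an ideal detector: signal divided by its square-root shot noise."""
    return signal_photons / math.sqrt(signal_photons)


def min_photons_for_snr(target_snr):
    """Smallest whole number of photons whose shot-noise SNR meets the target."""
    return math.ceil(target_snr ** 2)


print(min_photons_for_snr(2.7))         # 8
print(round(shot_noise_snr(8), 2))      # 2.83
```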

In practice, other noise components, which are not associated with the specimen photon signal, are contributed by the CCD and camera system electronics, and add to the inherent photon statistical noise. Once accumulated in collection wells, charge arising from noise sources cannot be distinguished from photon-derived signal. Most of the system noise results from readout amplifier noise and thermal electron generation in the silicon of the detector chip. The thermal noise is attributable to kinetic vibrations of silicon atoms in the CCD substrate that liberate electrons or holes even when the device is in total darkness, and which subsequently accumulate in the potential wells. For this reason, the noise is referred to as dark noise, and represents the uncertainty in the magnitude of dark charge accumulation during a specified time interval. The rate of generation of dark charge, termed dark current, is unrelated to photon-induced signal but is highly temperature dependent. Like photon noise, dark noise follows a statistical (square-root) relationship to dark current, and therefore it cannot simply be subtracted from the signal. Cooling the CCD reduces dark charge accumulation by an order of magnitude for every 20-degree Celsius temperature decrease, and high-performance cameras are usually cooled during use. Cooling even to 0 degrees Celsius is highly advantageous, and at -30 degrees Celsius, dark noise is reduced to a negligible value for nearly any microscopy application.
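
The cooling rule of thumb and the square-root relationship can be combined in a short sketch. The function names and the 100 electrons per pixel per second starting dark current are hypothetical values chosen to make the arithmetic easy to follow, not measured figures for any sensor.

```python
import math


def dark_charge_reduction(cooling_degrees_c, degrees_per_decade=20.0):
    """Factor by which dark charge accumulation falls for a given amount of cooling."""
    return 10 ** (cooling_degrees_c / degrees_per_decade)


def dark_noise(dark_current_e_per_s, exposure_s):
    """Dark noise in electrons: square root of the accumulated dark charge."""
    return math.sqrt(dark_current_e_per_s * exposure_s)


warm_current = 100.0                                        # hypothetical, electrons/pixel/second
cooled_current = warm_current / dark_charge_reduction(40)   # cooled by 40 degrees C

print(dark_noise(warm_current, 1.0))    # 10.0
print(dark_noise(cooled_current, 1.0))  # 1.0
```

In this example a 40-degree temperature drop lowers dark current a hundredfold but dark noise only tenfold, because the noise scales with the square root of the accumulated charge.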

Providing that the CCD is cooled, the remaining major electronic noise component is read noise, primarily originating with the on-chip preamplifier during the process of converting charge carriers into a voltage signal. Although the read noise is added uniformly to every pixel of the detector, its magnitude cannot be precisely determined, but only approximated by an average value, in units of electrons (root-mean-square or rms) per pixel. Some types of readout amplifier noise are frequency dependent, and in general, read noise increases with the speed of measurement of the charge in each pixel. The increase in noise at high readout and frame rates is partially a result of the greater amplifier bandwidth required at higher pixel clock rates. Cooling the CCD reduces the readout amplifier noise to some extent, although not to an insignificant level. A number of design enhancements are incorporated in current high-performance camera systems that greatly reduce the significance of read noise, however. One strategy for achieving high readout and frame rates without increasing noise is to electrically divide the CCD into two or more segments in order to shift charge in the parallel register toward multiple output amplifiers located at opposite edges or corners of the chip. This procedure allows charge to be read out from the array at a greater overall speed without excessively increasing the read rate (and noise) of the individual amplifiers.

Cooling the CCD in order to reduce dark noise provides the additional advantage of improving the charge transfer efficiency (CTE) of the device. This performance factor has become increasingly important due to the large pixel-array sizes employed in many current CCD imagers, as well as the faster readout rates required for investigations of rapid dynamic processes. With each shift of a charge packet along the transfer channels during the CCD readout process, a small portion may be left behind. While individual transfer losses at each pixel are minuscule in most cases, the large number of transfers required, especially in megapixel sensors, can result in significant losses for pixels at the greatest distance from the CCD readout amplifier(s) unless the charge transfer efficiency is extremely high. The occurrence of incomplete charge transfer can lead to image blurring due to the intermixing of charge from adjacent pixels. In addition, cumulative charge loss at each pixel transfer, particularly with large arrays, can result in the phenomenon of image shading, in which regions of images farthest away from the CCD output amplifier appear dimmer than those adjacent to the serial register. Charge transfer efficiency values in cooled CCDs can be 0.9999 or greater, and while transfer losses at CTEs this high usually have a negligible effect on the image, values lower than 0.999 are likely to produce shading.

Both hardware and software methods are available to compensate for image intensity shading. A software correction is implemented by capturing an image of a uniform-intensity field, which is then utilized by the imaging system to generate a pixel-by-pixel correction map that can be applied to subsequent specimen images to eliminate nonuniformity due to shading. Software correction techniques are generally satisfactory in systems that do not require correction factors greater than approximately 10-20 percent of the local intensity. Larger corrections, up to approximately fivefold, can be handled by hardware methods through the adjustment of gain factors for individual pixel rows. The required gain adjustment is determined by sampling signal intensities in five or six masked reference pixels located outside the image area at the end of each pixel row. Voltage values obtained from the columns of reference pixels at the parallel register edge serve as controls for charge transfer loss, and produce correction factors for each pixel row that are applied to voltages obtained from that row during readout. Correction factors are large in regions of some sensors, such as areas distant from the output amplifier in video-rate cameras, and noise levels may be substantially increased for these image areas. Although the hardware correction process removes shading effects without apparent signal reduction, it should be realized that the resulting signal-to-noise ratio is not uniform over the entire image.
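
A software shading correction of the kind described above can be sketched as follows. The function names, the choice to normalize the map to the mean of the uniform-field image, and the 2 x 2 sample values are all assumptions made for illustration. The sketch also assumes that no reference pixel is zero and that any dark offset has already been removed.

```python
def correction_map(flat_field):
    """Per-pixel multipliers that would make the uniform-field image flat."""
    pixel_count = len(flat_field) * len(flat_field[0])
    mean = sum(sum(row) for row in flat_field) / pixel_count
    return [[mean / value for value in row] for row in flat_field]


def apply_correction(image, cmap):
    """Multiply each pixel of a specimen image by its correction factor."""
    return [
        [pixel * factor for pixel, factor in zip(image_row, map_row)]
        for image_row, map_row in zip(image, cmap)
    ]


# Hypothetical uniform field in which the right-hand column reads 20 percent low.
flat = [[100.0, 80.0], [100.0, 80.0]]
specimen = [[50.0, 40.0], [200.0, 160.0]]

corrected = apply_correction(specimen, correction_map(flat))
print([[round(v, 3) for v in row] for row in corrected])  # [[45.0, 45.0], [180.0, 180.0]]
```

After correction, pixels that received the same light level report the same value regardless of column, which is the intent of the pixel-by-pixel map.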

Spatial and Temporal Resolution in CCD Image Sensors

In many applications, an image capture system capable of providing high temporal resolution is a primary requirement. For example, if the kinetics of a process being studied necessitates video-rate imaging at moderate resolution, a camera capable of delivering superb resolution is, nevertheless, of no benefit if it only provides that performance at slow-scan rates, and performs marginally or not at all at high frame rates. Full-frame slow-scan cameras do not deliver high resolution at video rates, requiring approximately one second per frame for a large pixel array, depending upon the digitization rate of the electronics. If specimen signal brightness is sufficiently high to allow short exposure times (on the order of 10 milliseconds), the use of binning and subarray selection makes it possible to acquire about 10 frames per second at reduced resolution and frame size with cameras having electromechanical shutters. Faster frame rates generally necessitate the use of interline-transfer or frame-transfer cameras, which do not require shutters and typically can also operate at higher digitization rates. The latest generation of high-performance cameras of this design can capture full-frame 12-bit images at near video rates.

The now-excellent spatial resolution of CCD imaging systems is coupled directly to pixel size, and has improved consistently due to technological improvements that have allowed CCD pixels to be made increasingly smaller while maintaining other performance characteristics of the imagers. In comparison to typical film grain sizes (approximately 10 micrometers), the pixels of many CCD cameras employed in biological microscopy are smaller and provide more than adequate resolution when coupled with commonly used high-magnification objectives that project relatively large-radii diffraction (Airy) disks onto the CCD surface. Interline-transfer scientific-grade CCD cameras are now available having pixels smaller than 5 micrometers, making them suitable for high-resolution imaging even with low-magnification objectives. The relationship of detector element size to relevant optical resolution criteria is an important consideration in choosing a digital camera if the spatial resolution of the optical system is to be maintained.

The Nyquist sampling criterion is commonly utilized to determine the adequacy of detector pixel size with regard to the resolution capabilities of the microscope optics. Nyquist's theorem specifies that the smallest diffraction disk radius produced by the optical system must be sampled by at least two pixels in the imaging array in order to preserve the optical resolution and avoid aliasing. As an example, consider a CCD having pixel dimensions of 6.8 x 6.8 micrometers, coupled with a 100x, 1.3 numerical aperture objective, which produces a 26-micrometer (radius) diffraction spot at the plane of the detector. Excellent resolution is possible with this detector-objective combination, because the diffraction disk radius covers approximately a 4-pixel span (26 / 6.8 = 3.8 pixels) on the detector array, or nearly twice the Nyquist limiting criterion. At this sampling frequency, sufficient margin is available that the Nyquist criterion is nearly satisfied even with 2 x 2 pixel binning.
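
The sampling check in this example can be reproduced with a few lines of arithmetic. The sketch below uses the common Rayleigh expression for the diffraction disk radius (0.61 times wavelength divided by numerical aperture, scaled by magnification) and assumes a wavelength of 550 nanometers, which is not stated in the text but reproduces the quoted radius of about 26 micrometers. The function names are hypothetical.

```python
def airy_radius_at_detector_um(wavelength_um, numerical_aperture, magnification):
    """Diffraction disk radius projected onto the detector, in micrometers."""
    return 0.61 * wavelength_um / numerical_aperture * magnification


def pixels_per_radius(radius_um, pixel_um, binning=1):
    """How many (super)pixels span one diffraction disk radius."""
    return radius_um / (pixel_um * binning)


radius = airy_radius_at_detector_um(0.55, 1.3, 100)  # 0.55 um wavelength is an assumption
print(round(radius, 1))                          # 25.8
print(round(pixels_per_radius(26, 6.8), 1))      # 3.8
print(round(pixels_per_radius(26, 6.8, 2), 1))   # 1.9
```

The last value falls just short of 2 pixels per radius, which is why the criterion is described as nearly, rather than fully, satisfied with 2 x 2 binning.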

Image Sensor Quantum Efficiency

Detector quantum efficiency (QE) is a measure of the likelihood that a photon having a particular wavelength will be captured in the active region of the device to enable liberation of charge carriers. The parameter represents the effectiveness of a CCD imager in generating charge from incident photons, and is therefore a major determinant of the minimum detectable signal for a camera system, particularly when performing low-light-level imaging. No charge is generated if a photon never reaches the semiconductor depletion layer or if it passes completely through without transfer of significant energy. The nature of interaction between a photon and the detector depends upon the photon's energy and corresponding wavelength, and is directly related to the detector's spectral sensitivity range. Although conventional front-illuminated CCD detectors are highly sensitive and efficient, none have 100-percent quantum efficiencies at any wavelength.

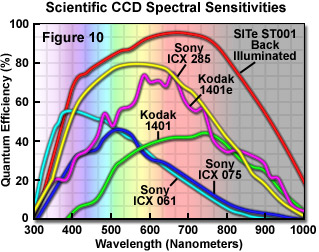

Image sensors typically employed in fluorescence microscopy can detect photons within the spectral range of 400-1100 nanometers, with peak sensitivity normally in the range of 550-800 nanometers. Maximum QE values are only about 40-50 percent, except in the newest designs, which may reach 80 percent efficiency. Figure 10 illustrates the spectral sensitivity of a number of popular CCDs in a graph that plots quantum efficiency as a function of incident light wavelength. Most CCDs used in scientific imaging are of the interline-transfer type, and because the interline mask severely limits the photosensitive surface area, many older versions exhibit very low QE values. With the advent of the surface microlens technology to direct more incident light to the photosensitive regions between transfer channels, newer interline sensors are much more efficient and many have quantum efficiency values of 60-70 percent.

Sensor spectral range and quantum efficiency are further enhanced in the ultraviolet, visible, and near-infrared wavelength regions by various additional design strategies in several high-performance CCDs. Because aluminum surface transfer gates absorb or reflect much of the blue and ultraviolet wavelengths, many newer designs employ other materials, such as indium-tin oxide, to improve transmission and quantum efficiency over a broader spectral range. Even higher QE values can be obtained with specialized back-thinned CCDs, which are constructed to allow illumination from the rear side, avoiding the surface electrode structure entirely. To make this possible, most of the silicon substrate is removed by etching, and although the resulting device is delicate and relatively expensive, quantum efficiencies of approximately 90 percent can routinely be achieved.

Other surface treatments and construction materials may be utilized to gain additional spectral-range benefits. Performance of back-thinned CCDs in the ultraviolet wavelength region is enhanced by the application of specialized antireflection coatings. Modified semiconductor materials are used in some detectors to improve quantum efficiency in the near-infrared. Sensitivity to wavelengths outside the normal spectral range of conventional front-illuminated CCDs can be achieved by the application of wavelength-conversion phosphors to the detector face. Phosphors for this purpose are chosen to absorb photon energy in the spectral region of interest and emit light within the spectral sensitivity region of the CCD. As an example of this strategy, if a specimen or fluorophore of interest emits light at 300 nanometers (where sensitivity of any CCD is minimal), a conversion phosphor can be employed on the detector surface that absorbs efficiently at 300 nanometers and emits at 560 nanometers, within the peak sensitivity range of the CCD.

Dynamic Range

The dynamic range of a CCD detector expresses the maximum signal intensity variation that can be quantified by the sensor. The quantity is specified numerically by most CCD camera manufacturers as the ratio of pixel full well capacity (FWC) to the read noise, with the rationale that this value represents the limiting condition in which intrascene brightness ranges from regions that are just at pixel saturation level to regions that are barely lost in noise. The sensor dynamic range determines the maximum number of resolvable gray-level steps into which the detected signal can be divided. To take full advantage of a CCD's dynamic range, it is appropriate to match the analog-to-digital converter's bit depth to the dynamic range in order to allow discrimination of as many gray scale steps as possible. For example, a camera with a 16,000-electron FWC and readout noise of 10 electrons has a dynamic range of 1600, which supports between 10-bit and 11-bit A/D conversion. Analog-to-digital converters with bit depths of 10 and 11 are capable of discriminating 1024 and 2048 gray levels, respectively. As stated previously, because a computer bit can only assume one of two possible states, the number of intensity steps that can be encoded by a digital processor (ADC) reflects its resolution (bit depth), and is equal to 2 raised to the value of the bit depth specification. Therefore, 8, 10, 12, and 14-bit processors can encode a maximum of 256, 1024, 4096, or 16384 gray levels, respectively.
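
The relationship between dynamic range and ADC bit depth in this example can be sketched as follows. The function names are hypothetical, and the base-2 logarithm is used only to show where the dynamic range falls between two whole-number bit depths.

```python
import math


def dynamic_range(full_well_electrons, read_noise_electrons):
    """Manufacturer-style dynamic range: full well capacity over read noise."""
    return full_well_electrons / read_noise_electrons


def equivalent_bits(range_ratio):
    """Bit depth whose gray-level count would equal the dynamic range."""
    return math.log2(range_ratio)


def gray_levels(bit_depth):
    """Number of intensity steps an ADC of the given bit depth can encode."""
    return 2 ** bit_depth


dr = dynamic_range(16000, 10)
print(dr)                                          # 1600.0
print(round(equivalent_bits(dr), 2))               # 10.64
print([gray_levels(b) for b in (8, 10, 12, 14)])   # [256, 1024, 4096, 16384]
```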

Specifying dynamic range as the ratio of full well capacity to read noise is not necessarily a realistic measure of useful dynamic range, but is valuable for comparing sensors. In practice, useful dynamic range is smaller both because CCD response becomes nonlinear before full well capacity is reached and because a signal level equal to read noise is unacceptable visually and virtually useless for quantitative purposes. Note that the maximum dynamic range is not equivalent to the maximum possible signal-to-noise ratio, although the SNR is also a function of full well capacity. The photon statistical noise associated with the maximum possible signal, or FWC, is the square root of the FWC value, or 126 electrons, for the previous example of a 16,000-electron FWC. The maximum signal-to-noise ratio is therefore equal to the maximum signal divided by noise (16,000/126), or 126, the square root of the signal itself. The photon noise represents the minimum intrinsic noise level, and both detected stray light and electronic (system) noise diminish the maximum SNR that can be realized in practice to values below 126, since these sources reduce the effective FWC by adding charge that is not signal to the wells.
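
To make the distinction between dynamic range and maximum signal-to-noise ratio concrete, the sketch below computes the photon-limited SNR at full well and then shows how a read noise term lowers it. Adding read noise in quadrature is a common textbook assumption for independent noise sources and is not a formula given in this article. The function names are hypothetical.

```python
import math


def photon_limited_snr(signal_electrons):
    """Best-case SNR when shot noise is the only noise source."""
    return math.sqrt(signal_electrons)


def snr_with_read_noise(signal_electrons, read_noise_electrons):
    """SNR with shot noise and read noise combined in quadrature (assumed model)."""
    total_noise = math.sqrt(signal_electrons + read_noise_electrons ** 2)
    return signal_electrons / total_noise


print(round(photon_limited_snr(16000)))          # 126
print(round(snr_with_read_noise(16000, 10), 1))  # 126.1
```

For the same sensor, the dynamic range is 1600 while the maximum SNR is only about 126, so the two figures should not be used interchangeably.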

Although a manufacturer might typically equip a camera having a dynamic range of approximately 4000, for example, with a 12-bit ADC (4096 digitization steps), several factors are relevant in considering the match between sensor dynamic range and the digitizing capacity of the processor. For some of the latest interline-transfer CCD cameras that provide 12-bit digitization, the dynamic range determined from the FWC and read noise is approximately 2000, which would not normally require 12-bit processing. However, a number of the current designs include an option for setting gain at 0.5x, allowing full utilization of 12-bit resolution. This strategy takes advantage of the fact that pixels of the serial register are designed to have twice the electron capacity of parallel register pixels, and when the camera is operated in 2 x 2 binning mode (common in fluorescence microscopy), 12-bit high-quality images can be obtained.

It is important to be aware of the various mechanisms by which electronic gain can be manipulated to utilize the available bit depth of the processor, and when dynamic range of different cameras is being compared, the best approach is to calculate the value from the pixel full well capacity and camera read noise. It is common to see camera systems equipped with processing electronics having much higher digitizing resolution than required by the inherent dynamic range of the camera. In such a system, operation at the conventional 1x electronic gain setting results in a potentially large number of unused processor gray-scale levels. It is possible for the camera manufacturer to apply an unspecified gain factor of 2-4x, which might not be obvious to the user, and although this practice does boost the signal to utilize the full bit depth of the ADC, it produces increased digitization noise as the number of electrons constituting each gray level step is reduced.

The need for high bit depth in CCD cameras might be questioned in view of the fact that display devices such as computer monitors and many printers utilize only 8-bit processing, providing 256 gray levels, and other printed media as well as the human eye may only provide 5-7 bit discrimination. In spite of such low visual requirements, high bit-depth, high dynamic range camera systems are always advantageous, and are necessary for certain applications, particularly in fluorescence microscopy. When processing ratiometric or kinetic imaging data in quantitative investigations, larger numbers of gray levels allow light intensities to be more accurately determined. Additionally, when multiple image-processing operations are being performed, image data that are more precisely resolved into many gray level steps can withstand a greater degree of mathematical manipulation without exhibiting degradation as a result of arithmetic rounding-off errors.

A third advantage of high-bit imaging systems is realized when a portion of a captured image is selected for display, and the region of interest spans only a portion of the full image dynamic range. To optimize representation of the limited dynamic range, the original number of gray levels is typically expanded to occupy all 256 levels of an 8-bit monitor or print. Higher camera bit depth results in a less extreme expansion, and correspondingly less image degradation. As an example, if a selected image region spans only 5 percent of the full intrascene dynamic range, this represents over 200 gray levels of the 4096 discriminated by a 12-bit processor, but only 12 steps with an 8-bit (256 levels) system. When displayed at 256 levels on a monitor, or printed, the 12-level image expanded to this extent would appear pixelated, and exhibit blocky or contoured brightness steps rather than smooth tonal gradations.
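
The numbers in this example follow from simple proportions, as the sketch below shows. The function names are hypothetical, and fractional gray levels are truncated to whole steps.

```python
def levels_in_region(bit_depth, fraction_of_range):
    """Whole gray levels available to a region spanning part of the full range."""
    return int(2 ** bit_depth * fraction_of_range)


def display_stretch(bit_depth, fraction_of_range, display_levels=256):
    """Expansion factor needed to spread the region over the display's levels."""
    return display_levels / levels_in_region(bit_depth, fraction_of_range)


print(levels_in_region(12, 0.05))            # 204
print(levels_in_region(8, 0.05))             # 12
print(round(display_stretch(12, 0.05), 2))   # 1.25
print(round(display_stretch(8, 0.05), 2))    # 21.33
```

The 8-bit data must be stretched by a factor of roughly 21 to fill the display, compared with about 1.25 for the 12-bit data, which accounts for the contoured appearance described above.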

Color CCD Image Sensors

Although CCDs are not inherently color sensitive, three different strategies are commonly employed to produce color images with CCD camera systems in order to capture the visual appearance of specimens in the microscope. Earlier technical difficulties in displaying and printing color images are no longer an issue, and the increase in information provided by color can be substantial. Many applications, such as fluorescence microscopy, the study of stained histology and pathology tissue sections, and other labeled specimen observations using brightfield or differential interference contrast techniques rely on color as an essential image component. The acquisition of color images with a CCD camera requires that red, green, and blue wavelengths be isolated by color filters, acquired separately, and subsequently combined into a composite color image.

Each approach utilized to achieve color discrimination has strengths and weaknesses, and all impose constraints that limit speed, lower temporal and spatial resolution, reduce dynamic range, and increase noise in color cameras compared to gray-scale cameras. The most common method is to blanket the CCD pixel array with an alternating mask of red, green, and blue (RGB) microlens filters arranged in a specific pattern, usually the Bayer mosaic pattern. Alternatively, with a three-chip design, the image is divided with a beam-splitting prism and color filters into three (RGB) components, which are captured with separate CCDs, and their outputs recombined into a color image. The third approach is a frame-sequential method that employs a single CCD to sequentially capture a separate image for each color by switching color filters placed in the illumination path or in front of the imager.

The single-chip CCD with an adherent color filter array is used in most color microscopy cameras. The filter array consists of red, green, and blue microlenses applied over individual pixels in a regular pattern. The Bayer mosaic filter distributes color information over four-pixel sensor units that include one red, one blue, and two green filters. Green is emphasized in the distribution pattern to better conform to human visual sensitivity, and dividing color information among groups of four pixels only modestly degrades resolution. The human visual system acquires spatial detail primarily from the luminance component of color signals, and this information is retained in each pixel regardless of color. Visually satisfying images are achieved by combining color information of lower spatial resolution with the high-resolution monochrome structural details.
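
The layout of the mosaic can be sketched as a repeating four-pixel unit. The particular ordering used here (red and green on the first row, green and blue on the second) is an assumption for illustration; the text specifies only that each unit holds one red, one blue, and two green filters. The name `bayer_mask` is hypothetical.

```python
from collections import Counter

# One four-pixel unit: one red, two green, one blue.
# The RGGB ordering is assumed; other orderings of the same unit exist.
BAYER_UNIT = (("R", "G"),
              ("G", "B"))


def bayer_mask(rows: int, cols: int) -> list:
    """Tile the four-pixel unit over a rows x cols pixel array."""
    return [[BAYER_UNIT[r % 2][c % 2] for c in range(cols)]
            for r in range(rows)]


mask = bayer_mask(4, 4)
for row in mask:
    print(" ".join(row))

# Tally how many pixels carry each filter color.
print(Counter(color for row in mask for color in row))
```

For any array with even dimensions, half of the pixels are green and one quarter each are red and blue, which is the green emphasis described above.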

A unique design of single-CCD color cameras improves spatial resolution by slightly shifting the CCD between images taken in sequence, and then interpolating among them (a technique known as pixel-shifting), although image acquisition is slowed considerably by this process. Another approach to individual pixel masking is to rapidly move an array of color microlenses in a square pattern immediately over the CCD surface during photon collection. Finally, a recently introduced technology combines three photoelectron wells into each pixel at different depths for discrimination of photon wavelength. Maximum spatial resolution is retained in these strategies because each pixel provides red, green, and blue color information.

The three-chip color camera combines high spatial resolution with fast image acquisition, allowing high frame rates suitable for rapid image sequences and video output. By employing a beam splitter to direct signal to three filtered CCDs, which separately record red, green, and blue image components simultaneously, very high capture speeds are possible. However, because the light intensity delivered to each CCD is substantially reduced, the combined color image is much dimmer than a monochrome single-chip image given a comparable exposure. Gain may be applied to the color image to increase its brightness, but signal-to-noise ratio suffers, and images exhibit greater apparent noise. Spatial resolution attained by three-chip cameras can be higher than that of the individual CCD sensors if each CCD is offset by a subpixel amount relative to the others. Since the red, green, and blue images represent slightly different samples, they can be combined by the camera software to produce higher-resolution composite images. Many microscopy and other scientific applications that require high spatial and temporal resolution benefit from the use of triple-CCD camera systems.
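
The brightness and noise trade-off can be sketched under a strong simplifying assumption that is not stated in the text: photon shot noise is the only noise source, so noise equals the square root of the collected signal. The one-third split per CCD and the photon count are illustrative values, and `shot_noise_snr` is a hypothetical name.

```python
import math


def shot_noise_snr(photons: float, fraction: float = 1.0, gain: float = 1.0) -> float:
    """Signal-to-noise ratio when only shot noise is present.

    `fraction` is the share of the light reaching one sensor and `gain`
    is applied after collection, scaling signal and noise alike.
    """
    signal = photons * fraction      # photoelectrons actually collected
    noise = math.sqrt(signal)        # shot noise of the collected signal
    return (signal * gain) / (noise * gain)


mono = shot_noise_snr(9000)                             # all light on one chip
one_ccd = shot_noise_snr(9000, fraction=1 / 3, gain=3)  # brightness restored by gain
print(f"monochrome SNR: {mono:.1f}")
print(f"one of three CCDs, with gain: {one_ccd:.1f}")
```

In this simplified model the gain restores brightness but cancels out of the ratio, so the signal-to-noise ratio remains that of the reduced light level. Real cameras also add read noise and dark noise, which this sketch ignores.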

Color cameras referred to as frame-sequential are equipped with a motorized filter wheel or liquid-crystal tunable filter (LCTF) to sequentially expose red, green, and blue component images onto a single CCD. Because the same sensor is used for separate red, green, and blue images, the full spatial resolution of the chip is maintained, and image registration is automatically obtained. The acquisition of three frames in succession slows the process of image acquisition and display, and proper color balance often requires different integration times for the three colors. Although this camera type is not generally appropriate for high-frame-rate acquisition, the use of rapidly responding liquid-crystal tunable filters for the R-G-B sequencing can increase the operation speed substantially. The polarization sensitivity of LCTFs must be considered in some applications, as they only transmit one polarization vector, and may alter the colors of birefringent specimens viewed in polarized light.
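
The need for different integration times can be sketched as follows, assuming a linear sensor and a white reference that yields a known signal rate through each filter. The rates, the target level, and the name `balanced_integration_times` are hypothetical values chosen for illustration, not measurements from any camera.

```python
def balanced_integration_times(rates: dict, target: float) -> dict:
    """Integration time per color so each channel reaches `target`
    counts on a white reference, assuming signal = rate * time."""
    return {channel: target / rate for channel, rate in rates.items()}


# Hypothetical white-reference signal rates in counts per millisecond.
rates = {"R": 200.0, "G": 400.0, "B": 100.0}

times = balanced_integration_times(rates, target=2000.0)
print(times)
print("total acquisition time (ms):", sum(times.values()))
```

Because the three exposures are taken one after another, the total acquisition time is the sum of the individual integration times, before any filter-switching delay is added.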